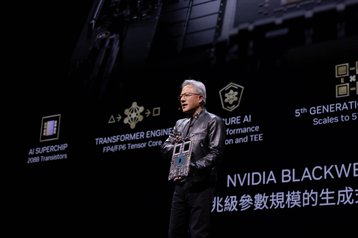

Nvidia CEO Jensen Huang used his keynote speech at Computex in Taiwan to unveil the company’s upcoming semiconductor roadmap, which will see a new product family launched every year.

He also announced that the successor to Nvidia’s Blackwell chip architecture is called Rubin and will be available from 2026.

While Huang provided very few technical details about the chips, he did reveal that the Rubin portfolio will include new GPUs, a new Arm-based CPU called Vera, and advanced networking chips, including the X1600 converged InfiniBand/Ethernet switch.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and push everything to technology limits,” Huang explained during his keynote speech.

In addition to moving up its GPU release cycle from every two years to annually, Huang also announced that Nvidia would be accelerating its networking product release schedule, with the company now planning to launch new Spectrum-X products every year.

Launched in May 2023, Spectrum-X is an accelerated networking platform for generative AI workloads, which Nvidia claims is the first Ethernet offering purpose-built for AI. The company says it accelerates generative AI network performance by 1.6x over traditional Ethernet fabrics.

In a statement, Nvidia said that each new release would deliver “increased bandwidth and ports,” in addition to “enhanced software feature sets and programmability” to improve AI Ethernet networking performance.

“Generative AI is reshaping industries and opening new opportunities for innovation and growth,” Huang told event attendees. “Today, we’re at the cusp of a major shift in computing… the intersection of AI and accelerated computing is set to redefine the future.”

AI factories and accelerated computing

Elsewhere in his two-hour-long keynote, Huang revealed that companies including Asus, Ingrasys, Inventec, QCT, Supermicro, and Wiwynn were partnering with Nvidia to deliver what he called AI factories.

Powered by the company’s Blackwell architecture, these so-called factories consist of AI systems for cloud, on-premises, embedded, and edge applications, with offerings ranging from single-GPU to multi-GPU configurations, from x86-based to Grace-based processors, and from air cooling to liquid cooling.

Huang also used his speech to launch the new GB200 NVL2 platform for Nvidia MGX. Designed to bring generative AI capabilities to the data center, the platform consists of two Blackwell GPUs and two Grace CPUs and will offer 40 petaflops of AI performance, 144 Arm Neoverse CPU cores, and 1.3 terabytes of memory.

Nvidia said the platform delivers “unparalleled performance,” providing 5x faster LLM inferencing, 9x faster vector database search, and 18x faster data processing compared with traditional CPU and GPU offerings.

“The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar traditional data centers to accelerated computing and build a new type of data center — AI factories — to produce a new commodity: artificial intelligence,” said Huang. “From server, networking, and infrastructure manufacturers to software developers, the whole industry is gearing up for Blackwell to accelerate AI-powered innovation for every field.”