GPU cloud provider Ionstream has added Nvidia B200s to its offering.

The company announced last week in a LinkedIn post that it had deployed Nvidia's Blackwell B200 GPUs and made them available through its GPU cloud.

According to the company, the GPUs offer up to 12x better energy efficiency than Nvidia's previous-generation H100 and A100, and each carries 192GB of HBM3e memory with 8TB/s of bandwidth. The B200s are connected with fifth-generation NVLink and NVSwitch for multi-GPU scaling, and are offered on demand from $2.40 per hour.

In a separate LinkedIn post, Ionstream's founder and CEO Jeff Hinkle wrote: "Businesses using AI are aware of its fast-moving pace and the highly competitive environment that is evolving daily. To stay ahead of your competitors means staying current with technology - especially your core GPU platform.

"I’m really excited to announce that Ionstream is an early adopter of the Nvidia HGX B200 and has machines ready to deploy today. With 3x faster training, 15x faster inference, and up to 12x greater energy efficiency than the previous generation, like the H100 and the A100. The Nvidia B200 GPU delivers the increased cost/performance ratio you need to stay ahead of your competition."

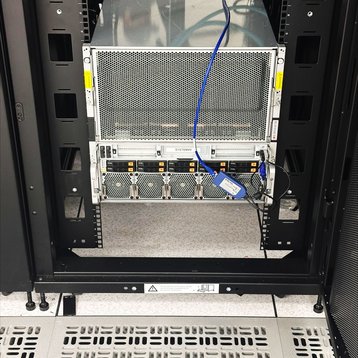

Ionstream is using Supermicro servers for the deployment, and the company has shared a video of the B200s in action.

Hinkle founded Ionstream in 2023, and the company is headquartered in Spring, Texas. It offers access to Nvidia's L40S, B200, and H200 GPUs, as well as AMD's Instinct MI300X. Ionstream does not disclose details of its data center footprint.

DCD has reached out for more information about where it hosts its GPU cloud.
