Google Cloud is now offering VMs with Nvidia H100s in smaller machine types.

The cloud company revealed on January 25 that its A3 High VMs with H100 GPUs would be available in configurations with one, two, four, or eight H100s.

This month has also seen the launch of Nvidia Blackwell-powered VMs at Google.

The move lets customers size their compute more precisely when a training workload is too small to need all eight GPUs.

This keeps customers from paying for GPUs that would otherwise sit underutilized.

The A3 machines are available through the fully managed Vertex AI, as nodes through Google Kubernetes Engine (GKE), and as VMs through Google Compute Engine.

Eran Dvey Aharon, VP of R&D at Tabnine, said of the smaller VMs: "We use Google Kubernetes Engine to run the backend for our AI-assisted software development product. Smaller A3 machine types have enabled us to reduce the latency of our real-time code assist models by 36 percent compared to A2 machine types, significantly improving user experience."

In a LinkedIn post, Google group product manager Nathan Beach noted that "other cloud providers like Amazon Web Services, Oracle, and CoreWeave only provide VMs with exactly 8 H100 GPUs per VM," adding: "Google Cloud is the only major cloud provider that allows you to choose whether you want 1, 2, 4, or 8 Nvidia H100 GPUs per VM."

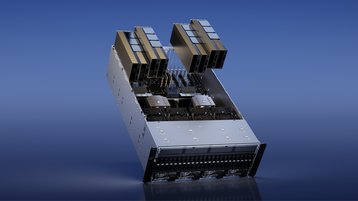

In addition to the smaller A3 VMs, Google has also announced that its A4 VMs powered by Nvidia's HGX B200 GPUs are now available in preview.

The A4 VM is made up of Blackwell GPUs interconnected by fifth-generation Nvidia NVLink. According to a blog post from Google, the VM offers a "significant performance boost over the previous generation A3 High VM."

Each B200 GPU delivers 2.25 times the peak compute and 2.25 times the HBM capacity of an H100 in the A3 High VM.

Google's Hypercompute Cluster enables customers to deploy and manage large clusters of A4 VMs with compute, storage, and networking as a single unit.

"Nvidia and Google Cloud have a long-standing partnership to bring our most advanced GPU-accelerated AI infrastructure to customers," said Ian Buck, VP and general manager of Hyperscale and HPC at Nvidia.

"The Blackwell architecture represents a giant step forward for the AI industry, so we’re excited that the B200 GPU is now available with the new A4 VM. We look forward to seeing how customers build on the new Google Cloud offering to accelerate their AI mission."