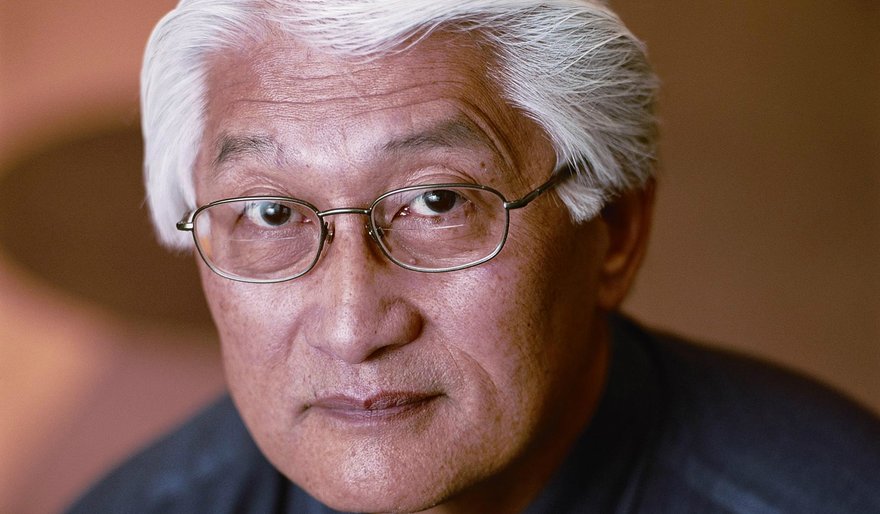

FOCUS: You have worked in hardware and software, for both IBM and Hitachi. Would you call yourself a software or hardware person? And how do these areas tie in with Hitachi Data Systems’ overall storage strategy moving forward?

Hu Yoshida: I guess my roots are in hardware but I have also managed software. As we look towards the Cloud it is going to be more about software – the future will also be less about storage and more about information.

Hitachi has a basic three-step strategy. The first step is to virtualize the infrastructure so that changes to it don’t affect access to information and data. We are probably best known for this, and this virtualization is now extending beyond storage into servers, software and hypervisors. Providing a virtual converged infrastructure means you can manage a VSAN through Microsoft, Systems Director or OpenStack, for example.

The next step is to virtualize the data from the application, because the apps are also going to change – they are going to age and will need to be refreshed. Sometimes virtualizing the application is more disruptive than virtualizing infrastructure. How do you virtualize data? If you separate data from applications it is just a bunch of bits, so you have to put those bits – that data – into a container along with metadata that describes it and policies that govern it. Once you do that you have an object store.
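To make the container idea concrete, here is a minimal Python sketch of an object that bundles data with descriptive metadata and governing policies. It is an illustration only, not Hitachi’s implementation: the `StoredObject` and `ObjectStore` names, the content-hash identifier and the `retention_seconds` policy key are all assumptions made for this example.

```python
import hashlib
import time
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """Data bundled with the metadata that describes it and the policies that govern it."""
    data: bytes
    metadata: dict
    policies: dict
    created: float = field(default_factory=time.time)


class ObjectStore:
    """Toy in-memory object store (hypothetical, for illustration only)."""

    def __init__(self):
        self._objects = {}

    def put(self, data, metadata, policies=None):
        # Assumption: objects are identified by a hash of their content.
        object_id = hashlib.sha256(data).hexdigest()
        self._objects[object_id] = StoredObject(data, dict(metadata), dict(policies or {}))
        return object_id

    def get(self, object_id):
        return self._objects[object_id]

    def delete(self, object_id, now=None):
        # The policy travels with the object, so it applies whichever application asks.
        obj = self._objects[object_id]
        now = time.time() if now is None else now
        if now < obj.created + obj.policies.get("retention_seconds", 0):
            raise PermissionError("retention policy still in force")
        del self._objects[object_id]


store = ObjectStore()
oid = store.put(
    b"chest x-ray pixels",
    {"modality": "radiology", "source_app": "scanner-v1"},
    {"retention_seconds": 3600},
)
```

Because the metadata and policy are stored with the bits rather than inside the application that wrote them, a refreshed or replaced application can still interpret the object and is still bound by its retention rule.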

Now with the metadata you are able to look across these different silos of data – they become first a pool of infrastructure then a pool of data. Then you are able to do analytics to search that data. This is what we call big data.

We have built a virtual infrastructure – Converged Infrastructure – and an Object Storage solution and content platform with the ability to ingest off-the-edge, search metadata to do discovery against it and index it. This is done in a secure encrypted environment so it meets all compliance requirements.

We are now in the information phase, looking at how we merge all these data sets. How do you discover data sitting at the back of a data center that is not accessible to one application, and present it using visualization aspects of the tier? We are moving into that phase now.

What does this mean for your development teams at Hitachi Data Systems?

First we are going to leverage things such as SAP HANA because we are seeing in the business world there is a need for velocity. We are looking at working with Hadoop, some of the open structures such as SQLstream and other open approaches for fast analytics. These are complex event-processing type programs.

We also want to bring in some of the areas we have at Hitachi. We realize there is a need to scale up as we get to virtual servers. Adding more virtual servers to a physical server increases demand on storage to scale up and we also see a need to scale out because you can’t bring the data to the applications. You are going to have to MapReduce the data.
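The MapReduce point can be sketched in a few lines of Python. This is a single-process illustration of the pattern, not a distributed implementation: the `partitions` dictionary and node names are invented, and in a real deployment each `map_partition` call would run on the node that holds the data, so only the small partial results travel over the network.

```python
from collections import Counter
from functools import reduce

# Hypothetical data partitions, each imagined as living on a different storage node.
partitions = {
    "node-a": ["error disk", "ok", "error cache"],
    "node-b": ["ok", "ok", "error disk"],
    "node-c": ["error port"],
}


def map_partition(records):
    """Map step: runs where the data lives and returns a small partial word count."""
    counts = Counter()
    for record in records:
        counts.update(record.split())
    return counts


def reduce_counts(left, right):
    """Reduce step: merges two partial counts into one."""
    return left + right


# Only the compact partial counts are combined; the raw records never move.
partials = [map_partition(records) for records in partitions.values()]
totals = reduce(reduce_counts, partials, Counter())
print(totals.most_common(2))
```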

Unstructured data becomes more important and puts more emphasis on files that can scale to billions of objects and can be searched without having to do a directory crawl.
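One common way to search without a directory crawl is to maintain an index keyed on metadata as objects are ingested. The Python sketch below shows the idea under simple assumptions: `MetadataIndex` is a hypothetical class, metadata values are assumed to be hashable, and only exact key=value matches are supported.

```python
from collections import defaultdict


class MetadataIndex:
    """Toy inverted index: maps each (key, value) pair to the ids of matching objects."""

    def __init__(self):
        self._index = defaultdict(set)

    def add(self, object_id, metadata):
        # Index at ingest time so that later searches never walk the stored objects.
        for key, value in metadata.items():
            self._index[(key, value)].add(object_id)

    def search(self, **criteria):
        """Return the ids of objects that match every key=value criterion."""
        sets = [self._index.get((key, value), set()) for key, value in criteria.items()]
        if not sets:
            return set()
        return set.intersection(*sets)


index = MetadataIndex()
index.add("obj-1", {"type": "image", "dept": "cardiology"})
index.add("obj-2", {"type": "image", "dept": "radiology"})
index.add("obj-3", {"type": "report", "dept": "radiology"})
matches = index.search(type="image", dept="radiology")
```

A lookup touches only the index entries named in the query, so its cost depends on the number of matching objects rather than on the total number stored.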

How does Hitachi’s approach differ from that seen across the industry?

We have what I call more of a grid architecture. We have an internal switch. If I need processing power in my storage I add a processor on it. If I need more front-end capacity I add front-end processors, or I can add back-end processors if needed.

These are all connected through an internal switch to a large cache. I can continue to scale this and it sees the same data image in one cache. Everyone else builds a controller with a processor front end, back end and cache as a node. To scale this way you need to add another external switch and another node with processor back end, when all they need is cache. This allows for more capacity but it is not going to scale up – you have to come from this port, go to this cache and come back. In our case, no matter what port, you see the same image. This allows us to scale the system vertically because it sees the same cache image vertically and horizontally.

How have you seen your conversations with customers change around big data and infrastructure?

A few years ago the IT department told the business what’s available, and the business made the best use of it. But this has turned on its head. We now see the business demanding services from internal IT. If they can’t provide it they will go find someone who can, because for them it is the life and death of the business.

We try to have more contact with the business team to understand their demands and ensure we have appropriate solutions. We also keep in contact with the IT guys because they need to provide these services. We can’t provide solutions that are fixed because they could be useless in six months’ time, or they might choose to outsource. We have to be far more flexible. Solutions that would have lasted three years may now last only months.

The role of IT will be to ensure they don’t have silos. Look at the virtualization of data, when you are not virtualizing the apps carrying it. Health care is an example. Companies such as GE and AGFA built machines for doing cardiology and radiology and sold directly to the end user. Now at the same hospital you can have different models of machine doing the same thing but they cannot share information. This is why we see companies move to OpenStack – they see it as a way to avoid vendor lock-in.

We have a big customer – I cannot tell you who yet, but it is an online reseller moving to OpenStack. We are its primary provider so we have also moved to OpenStack.

Are you targeting these solutions mainly at the larger enterprise customer?

The SMB guys have to compete against the enterprise players so they need the same price availability, performance and scale. How do we take an enterprise box and make it priced and packaged for the mid-range? A lot of companies go to different architectures such as dual controllers. One controller does the work, the other acts as failover. We have a global cache, just like in an enterprise. We can reduce price by converting a lot of the functions into ASICs – front end and back end. I take seven of those functions and reduce them to one LSI. This is how we reduce the price without giving up any functionality. We do this because we are an engineering company. We know how to build backplanes and controllers. This is our technical edge.

We have a package called HUS – Hitachi Unified Storage – that does file and block and virtual capacity like our enterprise system. It is priced for the mid-range and can compete with the same availability. The only thing I give up here is mainframe connection.

How big is the services industry becoming for Hitachi?

We now manage car maker BMW’s storage infrastructure on its own premises. We do all the provisioning, capacity management and performance management. Five years ago we would not have been able to do this. Services are a huge change for Hitachi. We now carry the risk of the inventory – the capital expense. But we can do it because we have the tools and ability to migrate environments. We know we can be successful in managed services and software-defined because we have the virtualization and architecture we need.

This article will appear in Focus 35, out in May.