Cerebras Systems has announced plans to launch six new AI inference data centers across North America and Europe.

According to the company, the data centers will be equipped with “thousands of Cerebras CS-3 systems” and will deliver more than 40 million Llama 70B tokens per second. Llama is the open-source large language model developed by Meta.

In a post on LinkedIn, Cerebras said this will make it the “world’s number one provider of high-speed inference and the largest domestic high-speed inference cloud.”

The planned locations for the upcoming data centers are Minneapolis, Minnesota; Oklahoma City, Oklahoma; Montreal, Canada; and three unnamed sites in the Midwest and eastern US and in Europe.

The company has already launched AI inferencing data centers in Santa Clara and Stockton in California and Dallas, Texas, all three of which are currently online.

The Minneapolis facility is slated to go live in Q2 2025, with the Oklahoma City and Montreal sites expected to follow in June and July of the same year.

In Oklahoma City, Cerebras said it will deploy more than 300 CS-3 systems in the Scale Datacenter, “a state-of-the-art Level 3+ computing facility,” complete with Scale’s “custom water-cooling solutions” to make it “uniquely suited to powering large scale deployments.” Cerebras said the facility, which is tornado- and seismically shielded and connected to triple-redundant power stations, is “one of the most robust data centers in the United States.”

Meanwhile, the Montreal deployment will be housed in a facility operated by Enovum, a division of Bit Digital, Inc.

The final three undisclosed locations across the US and Europe are scheduled for completion in Q4 2025.

“Cerebras is turbocharging the future of US AI leadership with unmatched performance, scale, and efficiency – these new global data centers will serve as the backbone for the next wave of AI innovation,” said Dhiraj Mallick, COO, Cerebras Systems. “With six new facilities coming online, we will add the needed capacity to keep up with the enormous demand for Cerebras industry-leading AI inference capabilities, ensuring global access to sovereign, high-performance AI infrastructure that will fuel critical research and business transformation.”

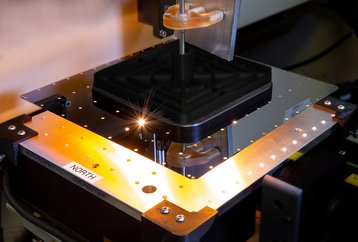

Cerebras Systems develops wafer-scale chips. Its Wafer Scale Engine 3 has four trillion transistors and 900,000 “AI cores,” alongside 44GB of on-chip SRAM. The chip is sold as part of the CS-3 system, and the company claims it is capable of 125 peak AI petaflops.

In August 2024, Cerebras confidentially filed for an IPO with the SEC, although the share price and expected market cap have yet to be revealed.