With the arrival of generative AI, coupled with the release of increasingly powerful GPUs, 2023 has seen discussions around high-density workloads in data centers ratchet up significantly.

In recent years, 'high density' has generally meant anything from the low double digits up to 30kW. Now, some companies are talking about operating at densities in the triple-figure kilowatt range.

Air-based cooling has its limitations, and these new high-density designs will have to utilize liquid cooling in some form. But are data center operators – and especially colocation providers – ready for that change?

Data center designs get denser – at least for hyperscalers

Schneider Electric predicts AI-based workloads could grow from 4.3GW to 13.5GW over the next five years, increasing from eight percent of current workloads to 15-20 percent.
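As a rough check on what that forecast implies, the annual growth rate can be worked out from the two endpoints. The sketch below assumes smooth compounding over exactly five years, which the forecast itself does not state, and the function name is purely illustrative.

```python
def implied_cagr(start_gw: float, end_gw: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_gw / start_gw) ** (1 / years) - 1


# Figures quoted from Schneider Electric: 4.3GW growing to 13.5GW in five years.
# Smooth year-on-year compounding is an assumption made for illustration.
cagr = implied_cagr(4.3, 13.5, 5)
print(f"Implied growth: {cagr:.1%} per year")
```

Under that assumption, the forecast works out to AI workload power growing by roughly a quarter each year.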

“It’s growing faster, but it’s not the majority of the workloads,” Andrew Bradner, general manager, cooling business, Schneider Electric, said during a DCD tour of the company’s cooling system factory in Conselve, Italy.

“They [hyperscalers] are in an arms race now, but many of our colocation customers are trying to understand how they deploy at this scale.”

After years of testing, hyperscalers are now deploying liquid cooling in various forms to accommodate increasingly dense chips designed for AI workloads.

“We’re seeing far more activity with large-scale planned deployments focused in the US with your typical Internet giants,” Bradner tells DCD in a follow-up conversation. “Where we see scale starting first is purpose-built, self-built by those Internet giants.”

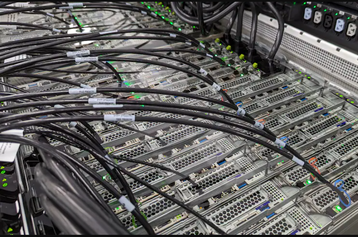

In 2021, Microsoft partnered with Wiwynn to test two-phase immersion cooling in production deployment at a facility in Quincy, Washington. In November 2023, the company revealed a new custom rack design to house its custom AI accelerator chip known as Maia.

The rack design, known as Ares, features a ‘side-kick’ hosting cooling infrastructure on the side of the system that circulates liquid to cold plates and will require some data centers to deploy water-to-air CDUs.

In November 2023, Amazon said its new instances with Nvidia’s GH200 Grace Hopper Superchips would be the first AI infrastructure on AWS to feature liquid cooling.

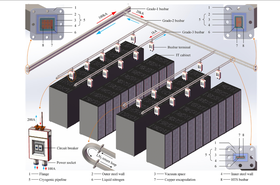

Most notably, Meta paused a large proportion of its global data center buildout in late 2022 as part of a companywide ‘rescope’ as it looked to adapt its designs for AI. The new-design facilities will be partially liquid-cooled, with Meta deploying direct-to-chip cooling for the GPUs, whilst sticking to air cooling for its traditional servers.

The company told DCD that having the hybrid setup allows Meta to expand with the AI market, but not over-provision for something that is still unpredictable. It said it won’t use immersion cooling for the foreseeable future as it isn’t “scalable and operationalized” to the social media company’s requirements at this point.

On the colocation and wholesale side, the likes of Digital Realty, CyrusOne, and DataBank are all offering new high-density designs that can utilize air and liquid cooling.

Digital Realty recently said its new high-density colocation service will be in 28 markets across North America, EMEA, and Asia Pacific. The service will offer densities of up to 70kW per rack using “Air-Assisted Liquid Cooling (AALC) technologies” – later confirmed to DCD as “rear door heat exchangers on racks.”

DataBank’s Universal Data Hall Design (UDHD) offers a flexible design able to host a mix of high- and low-density workloads. The base design incorporates slab flooring with perimeter cooling – with the option to include raised flooring – while the company has said technologies like rear door heat exchangers or direct-to-chip cooling can be provisioned with “minimal effort.”

2023 saw CyrusOne announce a new AI-specific built-to-suit data center design that can accommodate densities of up to 300kW per rack. The company has said its Intelliscale design will be able to use liquid-to-chip cooling technology, rear door heat exchanger cooling systems, and immersion cooling in a building footprint a quarter of the size of its usual builds.

Densities up, but retail colo yet to see the liquid boom

DCD spoke to a number of retail colocation providers about what they are seeing in terms of density demands vs liquid cooling. While most said that densities are creeping up, it is slow. What liquid interest there is is still direct-to-chip, and there is currently little demand for immersion cooling amongst their wider customer base.

Schneider’s Bradner notes that supply chain constraints around the latest GPUs and AI chips mean the extreme high-density demands are yet to trickle out of hyperscale companies’ own facilities and into their colocation deployments, let alone reach mass availability to the general market: “And until that happens, it’s going to take time for it to be as prevalent around the world,” he says.

“That’s not to say that liquid cooling isn’t growing and people aren’t stacking racks now to 30-40kW. We’re seeing far more requests around the world for higher density. But what’s hard to tell is whether it’s planned density or it’s actual deployed density; I get a sense a lot of it is more planned density.”

In the meantime, even without the latest and greatest AI hardware, the average densities of colocation facilities are still creeping up.

In its Q3 2023 earnings call, Equinix noted it is seeing average densities creep up across its footprint. CEO Charles Meyers said that over the first three quarters of 2023, the cabinets the company turned over had an average density of 4kW, while new billable cabinets were added at an average of 5.7kW.

“The reality is we’ve been paddling hard against that increase in density when it comes to cabinet growth,” he said. “We still see meaningful demand well below that 5.7[kW] and then you see some meaningfully above that. We might see deals that are 10, 15, 20, or more kilowatts per cabinet. We may even be looking at liquid cooling to support some of those very high-density requirements.

“It’s an opportunity for us as we have this dynamic of space being freed up to the extent that we can match that up with power and cool it appropriately using liquid cooling or other means or traditional air-cooling means, then I think that’s an opportunity to unlock more value from the platform.”

Equinix is also deploying liquid cooling for some of its bare metal infrastructure. In February 2023, the colo giant said the company had been testing ZutaCore’s liquid cooling systems at its co-innovation center for a year, and in June 2022 installed some of the two-phase tech in live servers for its metal infrastructure-as-a-service offering.

A rack full of operational two-phase cooling had, at that point, been stable for six months in its NY5 data center in Secaucus, New Jersey.

December 2023 saw the colo giant announce plans to expand support for liquid cooling technologies – including direct-to-chip – to more than 100 of its International Business Exchange (IBX) data centers over 45 metros globally.

Bradner notes many large companies are still in the learning phase on what large-scale deployments will look like: “I’ve spoken to large Internet customers saying they’re looking at doing 40kW racks but aren’t really sure how they’re going to do it and might just look at the square meter density and separate the racks more.

“We know that a lot of our colo customers are doing spot deployments of high-density compute,” he says, “but there’s so much segregating of small deployments into the existing infrastructure, which can support that, because you’re not deploying at scale.”

Cyxtera, a retail colocation firm recently acquired by Brookfield, is also seeing customer densities go up generally.

“If we looked at the UK five plus years ago, densities were lucky to be hitting 4kW on average. Now we’re seeing 10+ regularly for customers,” says Charlie Bernard, director of growth strategy, EMEA, Cyxtera. “The environments are densifying and we’re getting much more performance out of less hardware that has high thermal design points (TDPs), along with the influx of hyper-converged infrastructure (HCI) that is pushing up the amount of density you can achieve in a single cabinet.”

Cyxtera says it has 19 sites that can support 70kW+ workloads with a combination of air cooling in cold aisle containment and liquid cooling.

“AI and HPC-specific workloads are pushing limits, and we’re seeing requests of 50kW+ pretty regularly now, we get a few a week, and we have implemented direct-to-chip in the US en masse for some of these requirements,” says Bernard. “But even the traditional workloads we’re seeing; private cloud, SAP clusters, etc. It’s steadily inclining up.”

To date, he says, liquid cooling has been the preserve of very large enterprises and service providers – the majority of which are doing direct-to-chip.

“I think the reasoning behind that is likely the lack of OEM support. The majority of customers aren’t going to move forward with a solution they can’t get fully supported and warranted by the service provider and OEM they’re working with. We’ll see a dramatic uptake once the OEMs are supporting and warranting the hardware and endorsing it to customers.”

Hybrid cooling to become the norm?

While an entirely liquid-based estate might be easier to handle, it would be overkill in environments with a variety of hardware and workload requirements.

This means hybrid deployments that mix air-based cooling with the different varieties of liquid cooling are likely to become the norm as densities creep up.

Schneider’s Bradner tells DCD that for 350W chips, dry air cooling is still viable, but once we reach 500W per chip, companies need to start thinking about water – though without compressors via dry cooler or adiabatic systems. But at 700W chips, operators are going to need mechanical cooling along with water, and free cooling is less likely to be feasible.

“Below 40kW a rack, you have a few more options,” he says. “Some are more efficient than others, but you can use air-based cooling as a way to cool those loads. As you get into 50kW a rack, air-based cooling becomes less viable in terms of efficiency and sustainability. And then over 60kW per rack, we don’t have a choice, you really need to go to liquid applications.

“It may not be the most sustainable way, but we’re seeing a lot of people designing for air-based cooling at up to 40kW, and not making an architectural change to go to liquid.”
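Bradner's rules of thumb can be summarized as two simple lookups, one by chip power and one by rack density. The sketch below is only an illustration of the figures he quotes: the function names are hypothetical, and treating each quoted number as a hard cutoff (including how the gaps between the quoted figures are filled) is an assumption rather than anything Schneider has specified.

```python
def cooling_for_chip(chip_watts: float) -> str:
    """Heat-rejection approach by chip power, per the figures quoted above.

    Assumption: each quoted figure (500W, 700W) is treated as the lower
    bound of its band; the source only names 350W, 500W, and 700W.
    """
    if chip_watts >= 700:
        return "water with mechanical cooling"
    if chip_watts >= 500:
        return "water via dry cooler or adiabatic system"
    return "dry air cooling"


def cooling_for_rack(rack_kw: float) -> str:
    """Viability of air cooling by rack density, per the figures quoted above.

    Assumption: the 40-50kW range, which the quote does not address
    directly, is grouped with the air-cooled band.
    """
    if rack_kw > 60:
        return "liquid required"
    if rack_kw >= 50:
        return "air less viable"
    return "air viable"


for watts in (350, 500, 700):
    print(f"{watts}W chip: {cooling_for_chip(watts)}")
for kw in (30, 50, 70):
    print(f"{kw}kW rack: {cooling_for_rack(kw)}")
```

In practice these boundaries depend on climate, facility design, and the hardware involved, so the cutoffs are best read as conversation starters rather than design limits.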

And, while direct-to-chip might be the most prevalent liquid option today, it still has its limitations, meaning air is still required to take away the leftover heat.

“When you talk about a 50kW load, and you’re only doing 70 percent of that [via direct-to-chip], you have 15kW left. Well, that’s almost like what today’s cooling demand is in most data centers,” says Bradner.
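Bradner's arithmetic can be reproduced in a couple of lines. This is a minimal sketch using his figures; the function name and the idea of a single fixed capture fraction are simplifying assumptions, since the real split between liquid and air varies by server design.

```python
def residual_air_load_kw(rack_kw: float, liquid_capture_fraction: float) -> float:
    """Heat left for the air system after direct-to-chip removes its share."""
    return rack_kw * (1 - liquid_capture_fraction)


# Figures from the quote: a 50kW rack with 70 percent captured by liquid.
residual = residual_air_load_kw(50, 0.70)
print(f"Heat left for air cooling: {residual:.0f}kW")
```

The same function shows how quickly the air-side burden grows: at the same capture fraction, a 100kW rack would still leave about 30kW for air.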

Spencer Lamb, CCO of UK colo firm Kao Data, said the company’s average rack density across current installations is circa 7-10kW per rack, but density demand is “increasing significantly.”

“We’re now deploying 40kW air-cooled AI racks in our data halls. We achieve this by running CFD analysis, ascertaining how and where our customers require the higher-density racks within their deployments, thereby ensuring the cooling system isn’t compromised.”

Lamb said customers are seeking to deploy both direct-to-chip and immersion cooling, and the company’s design blueprint allows it to deploy either without significant engineering, to complement the existing air-cooled system.

“From a data center engineering viewpoint, keeping these technologies separate is the ideal outcome, but is not at all appropriate for the customers seeking to deploy this technology,” he says. “An end-user acquiring a direct-to-chip liquid cooling platform will more than likely be seeking an adjacent storage and network system which will be air-cooled, so some level of air cooling will be required and this is a trend we see for the foreseeable future.

“Direct-to-chip cooling will still generate residual heat and require conditioned air. Moving forward, data halls must be able to accommodate a hybrid approach to successfully house these systems.”

Are colos ready to offer liquid at scale?

While purpose-built liquid-cooled data centers do exist – Colovore in California is among the most well-known examples – most colo providers are facing the challenge of densifying existing facilities and retrofitting liquid cooling into them.

For now, many colo providers will leverage direct-to-chip because the number and scale of high-density deployments are low enough that they can leverage existing facility designs without too much change. But change is set to come.

“There’s a challenge with retrofits before we even got into that realm of high density in legacy sites where it’s complicated just to get to 10-15kW a rack,” says Schneider’s Bradner. “With the question of what happens with even higher densities, they could accept the higher densities but just less of it. But a lot of site power provisioning didn’t contemplate that instead of 10-20MW for a whole building, they might need that just on one floor.”

Cyxtera’s Bernard notes that many operators are still exploring how to standardize, operationalize, and ultimately, productize liquid cooling, all of which throw up multiple questions: “When you look at operationalizing it, we have to ask which of our data centers can support this; which can we tap into water loops, which have the excess capacity we can utilize for it? How are we going to handle the fluids in terms of fluid handling? Each data center is different; there’s going to be a differential cost around tapping into pipework.”

“One of the challenges right now is the lack of standards with the tanks; they all have slightly different connectors which you have to plug into from a liquid standpoint, some have CDUs, some have a heat exchanger,” he adds. “And once the tanks are in place, how do we take those multiple metrics and feeds that we get from the tanks in terms of temperature, for example, and put those data feeds into our BMS so we can monitor them? There’s no one universal set standard between all the manufacturers; they all have their own unique APIs so far.”
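One common way to cope with that kind of fragmentation is a thin adapter layer that converts each manufacturer's feed into a single internal format before it reaches the BMS. The sketch below is purely illustrative: the two vendors, their payload fields, and the `TankReading` type are all hypothetical and do not describe any real product's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class TankReading:
    """Common internal format that a BMS integration could consume."""
    tank_id: str
    coolant_temp_c: float


def from_vendor_a(payload: dict) -> TankReading:
    # Hypothetical vendor A: reports Celsius under "temp_c".
    return TankReading(payload["id"], float(payload["temp_c"]))


def from_vendor_b(payload: dict) -> TankReading:
    # Hypothetical vendor B: reports Fahrenheit under "tempF".
    celsius = (float(payload["tempF"]) - 32.0) * 5.0 / 9.0
    return TankReading(payload["tank"], celsius)


# One adapter per manufacturer; supporting a new tank means adding one entry.
ADAPTERS: Dict[str, Callable[[dict], TankReading]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalize(vendor: str, payload: dict) -> TankReading:
    """Convert a vendor-specific payload into the common format."""
    return ADAPTERS[vendor](payload)


readings = [
    normalize("vendor_a", {"id": "tank-1", "temp_c": 41.5}),
    normalize("vendor_b", {"tank": "tank-2", "tempF": 104.0}),
]
for reading in readings:
    print(reading)
```

The design choice here is to keep vendor quirks at the edge, so monitoring and alerting logic only ever sees one schema. It does not remove the underlying problem Bernard describes, since someone still has to write and maintain an adapter for every manufacturer.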

Telehouse, a global retail colo provider which operates a five-building campus in London, tells DCD it is yet to see major interest in liquid cooling, but is seeing a gradual increase in densities across its facilities.

“Most stuff is now in that 8-12kW category as standard,” says Mark Pestridge, senior director of customer experience at Telehouse Europe. “We’re also beginning to see some at 20kW. We don’t need to use immersion or liquid cooling to do that. Some people still only want 2kW in a rack, but we’re definitely seeing a move towards standard high density.”

“We have had a couple of inquiries [around liquid],” says Pestridge, “but we think it’s three to five years away.”

The company is still exploring the market and how different technologies could impact future designs of new buildings or potential retrofits of existing facilities.

Paul Lewis, SVP of technical service at Telehouse Europe, notes that for interconnection-focused operators, questions still remain around how you mix traditional raised floor colo space with liquid that will need a slab design, and then combine that with the meet-me rooms.

Cyxtera’s Bernard notes that there may be more regulatory and compliance considerations to factor in, such as the fact that different cooling fluids have different flashpoints and that different customers may utilize different cooling fluids in different tanks.

SLAs may have to change in the future too, potentially forcing operators to ensure guaranteed flows of water or other fluids.

“Currently, we do temperature, we do humidity, we do power availability, but ultimately now we’re going to have to do availability of water as well,” he adds. “That’s not currently an outlined SLA across the board, but I’ve no doubt we’re going to have measurements on us that we have to provide water to the tank with a certain consistency. So that’s another question.”

Bradner also notes redundancy thinking may have to change, especially if SLAs are now tied to water.

“Depending on the designs, liquid systems are sometimes UPS-backed up. But now there’s far more criticality because of what would happen to thermal events if you lost the pumps on a CDU because of loss of power.”

“At lower densities, you had some sort of inertia if you had a chilled water system to keep things going until your gen-sets came on. Now, with those higher densities, that’s a big problem, and how do you manage that and design for that?”
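The inertia Bradner describes can be approximated with a first-order energy balance: the time a water loop can absorb heat before exceeding its allowed temperature rise is the stored thermal capacity divided by the heat load. The sketch below uses approximate physical constants for water, but the loop volume, allowable rise, and load figures are hypothetical values chosen only for illustration. It also assumes the water keeps circulating and ignores every other source of thermal mass.

```python
WATER_SPECIFIC_HEAT_KJ_PER_KG_K = 4.186  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate value for liquid water


def ride_through_seconds(loop_volume_l: float,
                         allowable_rise_k: float,
                         heat_load_kw: float) -> float:
    """Seconds until the loop warms by allowable_rise_k with no heat rejection.

    First-order estimate: stored energy (kJ) divided by load (kW, i.e. kJ/s).
    """
    stored_kj = (loop_volume_l * WATER_DENSITY_KG_PER_L
                 * WATER_SPECIFIC_HEAT_KJ_PER_KG_K * allowable_rise_k)
    return stored_kj / heat_load_kw


# Hypothetical loop: 10,000 liters of water with a 6K allowable rise.
for load_kw in (500, 3000):
    seconds = ride_through_seconds(10_000, 6, load_kw)
    print(f"{load_kw}kW load: about {seconds:.0f} seconds of ride-through")
```

With these illustrative numbers, the same loop that buys more than eight minutes at 500kW offers under a minute and a half at 3,000kW, which is the shape of the problem Bradner is pointing to as densities rise.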